Second paper published in Radiology

Authors

Eun Kyoung Hong¹, Jiyeon Ham², Byungseok Roh², Jawook Gu³, Beomhee Park², Sunghun Kang², Kihyun You³, Juhwan Eom², Byeonguk Bae², Jae-Bock Jo³, Ok Kyu Song², Woong Bae³, Ro Woon Lee⁴, Chong Hyun Suh⁵, Chan Ho Park⁶, Seong Jun Choi⁶, Jai Soung Park⁶, Jae-Hyeong Park⁷, Hyun Jeong Jeon⁸, Jeong-Ho Hong⁹, Dosang Cho¹⁰, Han Seok Choi¹¹, Tae Hee Kim¹²

¹ Department of Radiology, Brigham & Women’s Hospital, 75 Francis St, Boston, MA 02215

² Kakao, Seoul, South Korea

³ Soombit.ai, Seoul, South Korea

⁴ Inha University, Incheon, South Korea

⁵ Asan Medical Center, Seoul, South Korea

⁶ College of Medicine, Soonchunhyang University, Cheonan, South Korea

⁷ College of Medicine, Chungnam National University, Daejeon, South Korea

⁸ College of Medicine, Chungbuk National University, Cheongju, South Korea

⁹ School of Medicine, Keimyung University, Daegu, South Korea

¹⁰ College of Medicine, Ewha Womans University, Seoul, South Korea

¹¹ College of Medicine, Dongguk University, Goyang, South Korea

¹² School of Medicine, Ajou University, Suwon, South Korea

Abstract

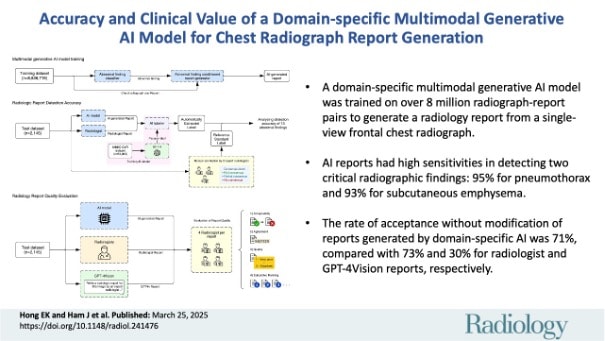

Use of a multimodal generative artificial intelligence model increased the efficiency and quality of chest radiograph interpretations by reducing reading times and increasing report accuracy and agreement.

Published in Radiology, 2025

https://doi.org/10.1148/radiol.241476